Self Service Infrastructure

2018-03-05

Why Implement Self Service Infrastructure

One of the many concepts of DevOps is self-service procurement of new infrastructure for teams to work with. I think this concept can be implemented at a range of levels: from giving people direct access to their organization's cloud provider dashboard, all the way to an automated request process that stands up a fully running instance of a product. The reasons for enabling this process are to keep team members from being blocked on what should be a routine or even trivial request in the age of cloud infrastructure, and to keep operations administrators' backlogs free of those same requests. The common thread is using another aspect of DevOps, automation, to keep things moving forward for, again, routine or even trivial requests.

Portal Access

The raw, level-zero implementation of this would be the Azure or AWS portal, where a team member can log in, select one or more components, wait a few moments and start using their new environment. That is a good option to have for certain staff so that they can quickly stand something up to test. This option can cause problems when the team member doesn't feel comfortable creating resources but is well versed in installing software on a machine. Other issues might arise if expensive resources are created and left running after the need for them has passed. A large and powerful VM sitting idle still has a cost associated with it.

Predefined Templates

Keeping ARM templates and deployment scripts in a read-only, well-known location would be a good first step toward installing some guard rails, so that at least common configurations can be captured. Need a VM and a database? Run this script, enter your Azure username and password into the dialog that appears, wait until you see Complete, and then log in.
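As a rough illustration of what such a canned script might run, here is a minimal Python sketch that builds (but does not execute) the Azure CLI calls for one template. It assumes the current Azure CLI, where `az deployment group create` replaced the older `az group deployment create`; the function name and the file names in the usage are hypothetical.

```python
def build_deploy_commands(resource_group, location, template_file, parameters_file):
    """Return the Azure CLI invocations for one canned template deployment.

    Hypothetical helper: commands are returned rather than run so a wrapper
    script can log them or hand them to subprocess.
    """
    return [
        # Interactive sign-in; this is where the user enters their Azure credentials.
        ["az", "login"],
        # Create (or reuse) the resource group that will hold the deployment.
        ["az", "group", "create", "--name", resource_group, "--location", location],
        # Deploy the shared, read-only ARM template with its parameter file.
        ["az", "deployment", "group", "create",
         "--resource-group", resource_group,
         "--template-file", template_file,
         "--parameters", "@" + parameters_file],
    ]
```

A wrapper could pass each list to `subprocess.run(cmd, check=True)` in order, stopping at the first failure.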

This option at least gives less experienced team members a way to stay off the backlog and keeps them moving forward with installing that vendor application we want to test drive. It can also give developers a way to do the same thing with the latest server application they just read about on HackerNews.

Even with this approach, you can still end up with resource sprawl and the cash register still spinning.

Dashboard with Predefined Templates

Depending on the level of sophistication or effort you want to put into it, a team could take the canned templates and establish a library served from a custom web application. The application could encapsulate authentication to the subscription so that a team member's claims would allow or deny the ability to create Azure resources.
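The claims check itself can be quite small. A minimal Python sketch, assuming a hypothetical catalogue in which each template names the single claim a user must hold to deploy it (the template and claim names are invented for illustration):

```python
# Hypothetical catalogue: template name -> claim required to deploy it.
TEMPLATE_CLAIMS = {
    "vm-and-database": "infra.deploy.standard",
    "gpu-cluster": "infra.deploy.expensive",
}


def can_deploy(user_claims, template_name):
    """Allow a deployment only for known templates whose claim the user holds."""
    required = TEMPLATE_CLAIMS.get(template_name)
    # Unknown templates are denied rather than allowed by default.
    return required is not None and required in user_claims
```

Denying unknown templates keeps the library, not the request, as the source of truth for what can be created.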

Another benefit of initiating this from a web application is that you can trace when resources were created and establish a rental period, or time to live, for requested resources.
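One way to sketch the rental idea in Python: stamp lease tags on each resource group at creation time, then have a scheduled job list the groups whose lease has run out. The tag names `requestedBy`, `createdOn` and `expiresOn` are assumptions for illustration, and the groups are plain dictionaries rather than real Azure objects.

```python
from datetime import datetime, timedelta, timezone


def lease_tags(requested_by, ttl_hours, now=None):
    """Hypothetical tags to stamp on a resource group when the portal creates it."""
    if now is None:
        now = datetime.now(timezone.utc)
    return {
        "requestedBy": requested_by,
        "createdOn": now.isoformat(),
        "expiresOn": (now + timedelta(hours=ttl_hours)).isoformat(),
    }


def expired_groups(groups, now=None):
    """groups maps resource group name -> tags; return names past their expiresOn."""
    if now is None:
        now = datetime.now(timezone.utc)
    return sorted(
        name
        for name, tags in groups.items()
        # Untagged groups are never considered expired.
        if "expiresOn" in tags and datetime.fromisoformat(tags["expiresOn"]) <= now
    )
```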

Infrastructure as Code for Creating Test Environments for Your Projects

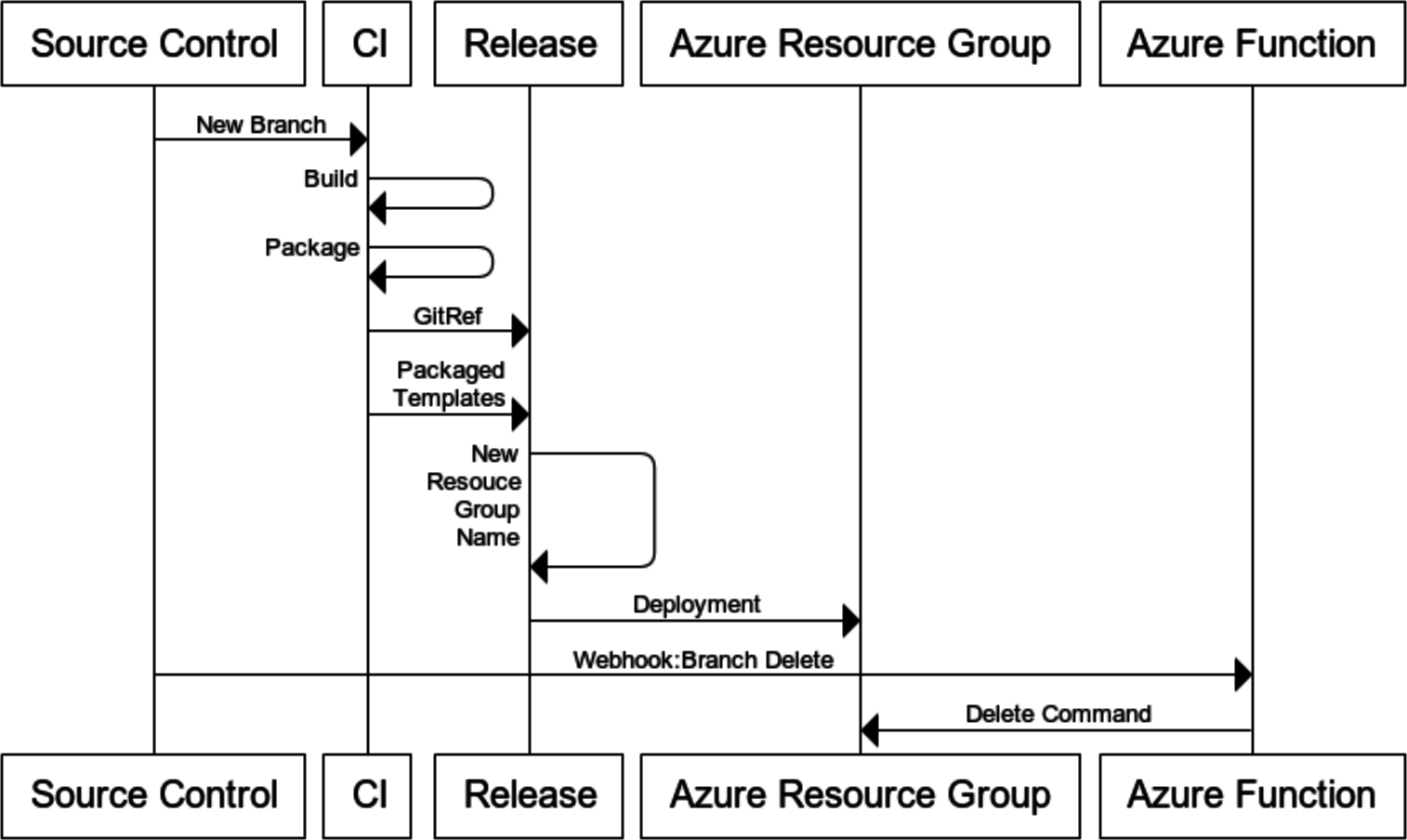

For projects, I'm a firm believer that every check-in on every branch gets a CI build. I also like the approach of deploying every branch to an environment so that the infrastructure as code is tested too. This can catch any configuration or performance regressions introduced in a given commit. I think this helps with another principle in the union of Development and Operations: shortening the feedback cycle on your changes. Not so much in validating the business hypothesis you are trying to prove, but in the quality of the changes being made to a project.

Testing The Environment Per Branch Idea With VSTS

I am already putting Azure ARM templates directly into my project's source tree, so my CI build approach doesn't have to change. They get packaged and pushed to my releases, where they are deployed as part of a pre-flight step for every release. Releases are heavily parameterized, with values fed from release variables or an Azure KeyVault, but there are a couple of tweaks that need to be made.

Usually I keep the Azure resource group name as a variable template, something like example-$(Release.EnvironmentName)-rg, so that I have specific deployments for each environment, such as example-ci-rg, example-dev-rg, example-uat-rg, example-prod-rg, etc. In order to drive a unique environment based on a topic branch, I need to inspect the branch name.

```powershell
$sourceBranchName = "$(Release.Artifacts.TestProject.SourceBranch)"
```

This gives us a value of refs/heads/feature/my-topic-branch, which we can naively sanitize with a couple of replacements:

```powershell
$branchNameSafe = (($sourceBranchName -replace 'refs/heads/','') -replace '/','_')
```

The result, feature_my-topic-branch in this case, is a value we can use as part of an Azure resource group name.
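The same transformation can be checked outside the pipeline. A minimal Python sketch that approximately mirrors the PowerShell (note that `-replace` is case-insensitive while `str.replace` is not) and adds a validity check, assuming a hypothetical naming pattern of example-&lt;branch&gt;-rg and Azure's documented resource group rules: up to 90 characters of letters, digits, underscores, hyphens, periods and parentheses, not ending in a period.

```python
import re


def branch_name_safe(source_branch):
    """Drop the refs/heads/ prefix and turn '/' into '_', like the PowerShell above."""
    return source_branch.replace("refs/heads/", "").replace("/", "_")


def resource_group_name(source_branch, prefix="example", suffix="rg"):
    """Build a per-branch resource group name (hypothetical prefix/suffix pattern)."""
    name = f"{prefix}-{branch_name_safe(source_branch)}-{suffix}"
    # \w approximates Azure's allowed letters, digits and underscore.
    valid = (
        len(name) <= 90
        and re.fullmatch(r"[\w\-.()]+", name) is not None
        and not name.endswith(".")
    )
    if not valid:
        raise ValueError(f"not a valid resource group name: {name}")
    return name
```

Git allows characters such as `#` in branch names that Azure rejects, so failing early in the release is friendlier than a failed ARM deployment.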

Now we have a deployed resource group for each new topic branch that is created in our project's Git repository.

This is going to get unwieldy very quickly, so we need a way to remove resources that are no longer needed, preferably in an automated fashion.

Tagging Resource Groups

One of the additional changes I made to my deployments is to tag the resource group with the Git source branch name. The primary reason is related to how the Git branch delete is processed: the clean-up function looks up the resource group by that tag rather than by its name.

Using a VSTS Git Webhook to Signal a Deleted Branch

Setting up a VSTS webhook is pretty easy, and for my implementation of cleaning up resources I just pointed it at an Azure Function webhook trigger.

```csharp
#r "Newtonsoft.Json"

using System;
using System.Linq;
using System.Threading.Tasks;
using Microsoft.Azure.Management.Fluent;
using Microsoft.Azure.Management.ResourceManager.Fluent;
using Microsoft.Azure.Management.ResourceManager.Fluent.Core;
using Newtonsoft.Json;

// Commit and BranchAction are my own types for deserializing the webhook payload (not shown).
public static async Task Run(string triggerInput, TraceWriter log)
{
    var commit = JsonConvert.DeserializeObject<Commit>(triggerInput);
    log.Info($"Message indicates {commit.BranchName} ({commit.BranchNameSafe}) has been {commit.BranchAction}");

    // Service principal credentials and the target subscription come from app settings.
    var clientId = Environment.GetEnvironmentVariable("key");
    var clientSecret = Environment.GetEnvironmentVariable("secret");
    var subscriptionId = Environment.GetEnvironmentVariable("targetSubscriptionId");
    var tenantId = Environment.GetEnvironmentVariable("targetTenantId");

    var credentials = SdkContext.AzureCredentialsFactory
        .FromServicePrincipal(clientId, clientSecret, tenantId, AzureEnvironment.AzureGlobalCloud);

    var azure = Azure
        .Configure()
        .WithLogLevel(HttpLoggingDelegatingHandler.Level.Basic)
        .Authenticate(credentials)
        .WithSubscription(subscriptionId);

    var branch = commit.BranchName;

    // Only a branch delete triggers clean-up; creates and normal pushes are ignored.
    if (commit.BranchAction == BranchAction.Destroyed)
    {
        log.Info($"Searching for resource group by branch tag {branch}");

        // Materialize the paged result once instead of enumerating it repeatedly.
        var resourceGroups = (await azure.ResourceGroups.ListByTagAsync("branch", branch)).ToList();

        if (resourceGroups.Count == 1)
        {
            var name = resourceGroups[0].Name;
            log.Info($"Removing resource group {name}");
            await azure.ResourceGroups.DeleteByNameAsync(name);
            log.Info($"Resource group {name} removed");
        }
        else if (resourceGroups.Count > 1)
        {
            // Never guess when the tag is ambiguous.
            log.Info($"No delete can be performed because multiple resource groups match branch tag {branch}");
        }
        else
        {
            log.Info($"No matching resource groups found for branch tag {branch}");
        }
    }
}
```

The elevator pitch of this Azure Function is to inspect the webhook data for the branch name and the old and new git refs. If the ref goes from a hash to all zeros (0000000000000000000000000000000000000000), it is a delete; if it goes from all zeros to a hash, it is a create; and if it goes from one hash to another, it is just a normal commit.
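That classification rule fits in a few lines. This Python sketch is an illustrative stand-in for the logic inside my `Commit` class, which is not shown; the return labels are hypothetical.

```python
# Git uses an all-zero object id to mean "no commit" on one side of a ref update.
ZERO_SHA = "0" * 40


def classify_ref_update(old_object_id, new_object_id):
    """Classify a ref update as 'created', 'deleted' or 'updated'."""
    if old_object_id != ZERO_SHA and new_object_id == ZERO_SHA:
        return "deleted"
    if old_object_id == ZERO_SHA and new_object_id != ZERO_SHA:
        return "created"
    # Hash to hash: an ordinary push of new commits.
    return "updated"
```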

For the clean-up logic, I only act on a branch delete. In that case I use the Azure SDK to query the resource groups by the branch tag, and if I get exactly one resource group from the target subscription, I issue a delete command.
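The exactly-one rule is the main safety net, so it is worth seeing in isolation. A minimal Python sketch, assuming resource groups are represented as a hypothetical mapping of name to tags dictionary instead of real SDK objects:

```python
def group_to_delete(resource_groups, branch):
    """Return the single resource group tagged with this branch, or None.

    resource_groups maps resource group name -> tags dict (hypothetical shape).
    """
    matches = [name for name, tags in resource_groups.items() if tags.get("branch") == branch]
    # Zero matches means nothing to do; more than one means the tag is ambiguous.
    return matches[0] if len(matches) == 1 else None
```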

For authentication I'm using a service principal in the subscription, and I'm also parameterizing the target subscription and tenant IDs. I also have a class to deserialize the webhook data, but that could be changed to a JObject since I only have three data points to fetch: the branch name and the old and new refs.
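Since only those values matter, a loosely typed parse is enough. A minimal Python sketch of the same idea, assuming the VSTS `git.push` service hook payload shape, in which each entry of `resource.refUpdates` carries `name`, `oldObjectId` and `newObjectId`:

```python
import json


def ref_updates(payload_text):
    """Extract (ref name, old object id, new object id) for each updated ref."""
    payload = json.loads(payload_text)
    return [
        (update["name"], update["oldObjectId"], update["newObjectId"])
        for update in payload["resource"]["refUpdates"]
    ]
```

Returning every ref update, rather than only the first, covers pushes that touch several branches at once.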

Here Be Dragons

First, don't do this. That is, don't do this in a subscription that is critical. A regression could easily slip in, and all of the resource groups could disappear.

I've warned you. Don't call me if something bad happens.

The new RBAC features in Azure might lessen the need to segregate some things by subscription, but I think that production resources should be deliberately separate.

I've warned you. Don't call me if something bad happens. I mean it.

Other Useful Ideas for This

Another useful idea for this, specifically with a product, would be for sales engineering or any other team members who need to do demos. They could request a new environment and have it prepared for a client demonstration with no concerns about throwing it away when it is over. Better yet, it would emphasize that master is always deployable and force everyone to think about quality, since the quality of the combination of your master branch and the deployment process could affect sales. Also, if your sales team can work with their own temporary sales branch and deployment, they could even personalize the demo.